WonderVision: Unlocking Freedom for the Blind

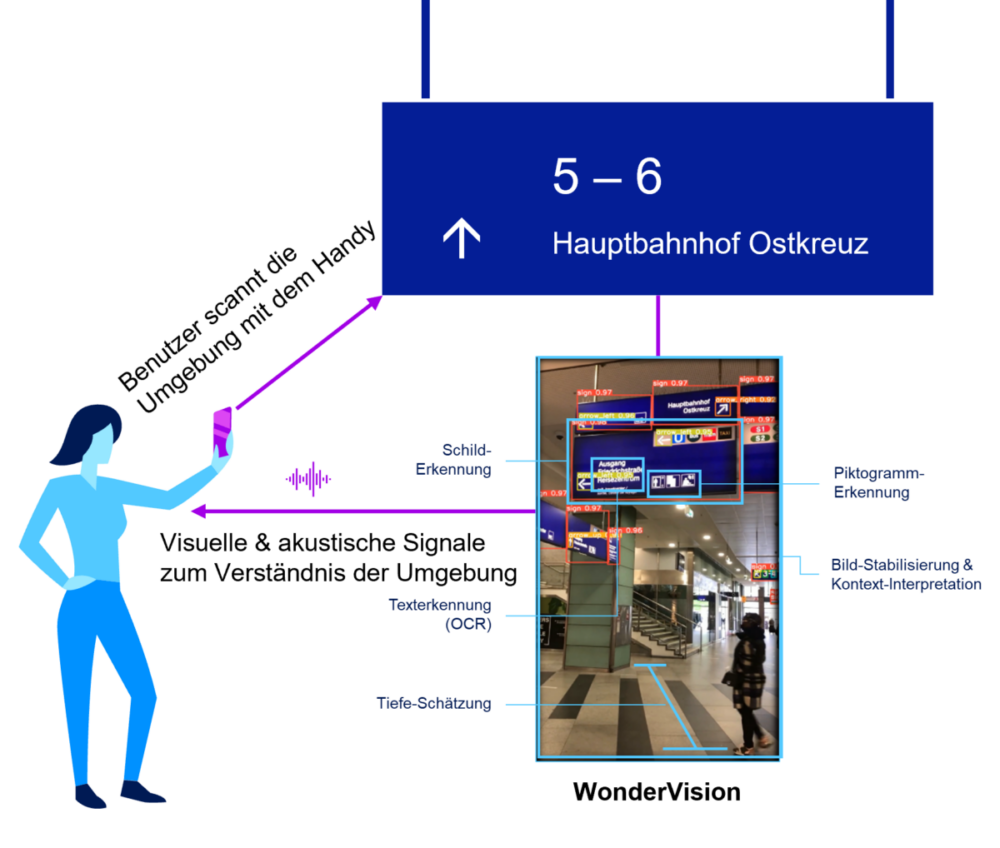

IAV's smart assistant WonderVision helps blind and visually impaired people find their way around public places independently and confidently. The solution combines smartphones with computer vision to meet the growing need for accessible information and wayfinding, with the aim of substantially improving the mobility and independence of visually impaired people.

A world of possibilities through smartphones

In Germany alone, over 70,000 blind people and several hundred thousand visually impaired individuals face barriers in accessing public spaces. With the development of WonderVision, IAV harnesses its expertise in autonomous driving, artificial intelligence, and computer vision to create a system that assists visually impaired users in navigating busy indoor areas, such as train stations, airports, hospitals, or shopping malls.

“For both sighted and blind people, mobility is a key aspect of quality of life,” says Dr.-Ing. Ahmed Hussein, project manager at IAV. “As a technology provider, we also want to bring our expertise to other fields of application and, for example, make the environment more accessible to visually impaired people.”

Technical Highlights


Computer vision

WonderVision uses advanced computer vision techniques to recognize various directional indicators like signs, textual cues, and pictograms.

The system estimates the distance and depth of these indicators, filters out the navigation instructions that are relevant to the user, and delivers them as voice output through a smartphone app.


AI-supported backend and cloud integration

IAV focuses on evaluating data in an AI-supported backend, with the aim of integrating object and environment recognition features into existing apps from mobility or service providers.

IAV’s Cloud Signaling Server connects the customer's front end to the backend, so that WonderVision can be deployed at scale.


Unlimited potential for recognition features

Because its backend is flexible, WonderVision can be adapted to diverse use cases and customer needs.

The technology can be extended with further features, such as destination navigation, location determination, person recognition, or product search in supermarkets.


Knowledge transfer from the automotive industry

IAV is transferring its methodological and technological know-how from the automotive industry to the smartphone.

WonderVision is a purely digital application and can be seamlessly integrated into existing apps, such as those of public transport operators, airlines, or even event venue operators.

WonderVision has been developed together with people with visual impairments, including people from the German Association of the Blind, to make sure it meets their requirements. By focusing on backend development, IAV remains flexible enough to serve diverse use cases and different customers according to their needs.
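The computer vision step described under "Computer vision" (recognize signs, text, and pictograms; estimate their distance; keep only the relevant instructions; speak them) can be illustrated with a minimal sketch. IAV has not published its implementation, so everything here is a hypothetical assumption: the `Detection` record, the confidence and distance thresholds, the destination match, and the phrasing of the instruction. Text-to-speech is replaced by returning plain strings.

```python
from dataclasses import dataclass
from typing import List, Optional


# Hypothetical output of the sign/pictogram recognizer (not IAV's data model).
@dataclass
class Detection:
    label: str         # recognized sign text or pictogram meaning, e.g. "exit"
    direction: str     # e.g. "to the left", "ahead", "to the right"
    distance_m: float  # distance from an (assumed) depth-estimation step
    confidence: float  # recognizer confidence in [0, 1]


def select_instructions(
    detections: List[Detection],
    destination: Optional[str] = None,
    min_confidence: float = 0.6,   # assumed cutoff for unreliable detections
    max_distance_m: float = 30.0,  # assumed range worth announcing
    limit: int = 2,                # keep voice output short
) -> List[str]:
    """Filter detections and phrase the nearest relevant ones as spoken instructions."""
    relevant = [
        d for d in detections
        if d.confidence >= min_confidence
        and d.distance_m <= max_distance_m
        and (destination is None or destination.lower() in d.label.lower())
    ]
    # Announce the closest indicators first.
    relevant.sort(key=lambda d: d.distance_m)
    return [
        f"{d.label.capitalize()} {d.direction}, about {round(d.distance_m)} meters"
        for d in relevant[:limit]
    ]


if __name__ == "__main__":
    frame = [
        Detection("platform 3", "to the left", 12.4, 0.91),
        Detection("exit", "ahead", 25.0, 0.85),
        Detection("toilets", "to the right", 8.0, 0.40),     # dropped: low confidence
        Detection("platform 7", "to the right", 45.0, 0.95),  # dropped: too far away
    ]
    for instruction in select_instructions(frame):
        print(instruction)  # a real app would hand this to text-to-speech
```

Sorting by distance and capping the number of announcements is one plausible way to keep voice output brief, since the user listens rather than reads; in practice such thresholds would have to be tuned together with blind and visually impaired users.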

Empowering the visually impaired – one step at a time

IAV has successfully demonstrated a proof of concept and aims to develop the WonderVision technology into a Minimum Viable Product (MVP) by 2023. By collaborating with visually impaired individuals, IAV ensures the system is developed in line with their specific requirements and preferences, ultimately enhancing their quality of life and independence.

Q&A


How does WonderVision work for visually impaired users?

WonderVision uses image recognition and depth estimation to detect directional indicators and provide relevant navigation instructions to the user via voice output on a smartphone app.


In which environments can WonderVision be used?

WonderVision is designed to assist visually impaired users in navigating busy indoor areas like train stations, airports, hospitals, and shopping malls. However, the technology has the potential to be adapted for other environments and use cases.


What are the future plans for WonderVision?

IAV aims to develop WonderVision into a Minimum Viable Product (MVP) by 2023 and to integrate its features into existing apps from mobility or service providers, further improving accessibility for visually impaired users in public spaces.

Do you have any questions?

Feel free to contact our project manager:

Dr.-Ing. Ahmed Hussein

Project Manager

ahmed.hussein@iav.de